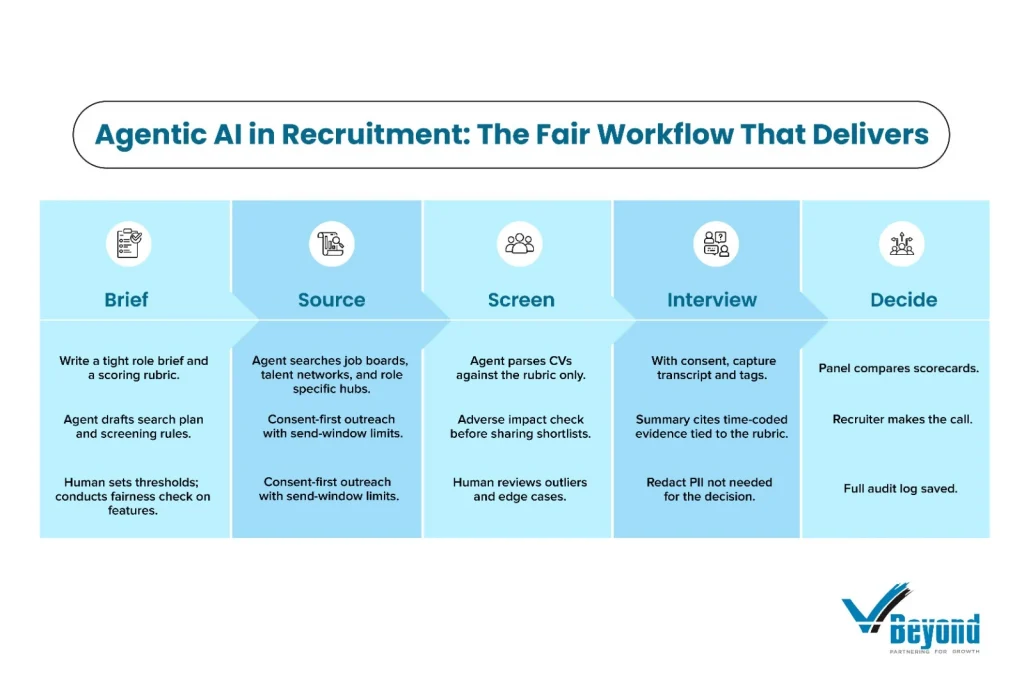

• This blog explains how agentic AI in recruitment can cut hiring cycle time and raise decision quality while keeping fairness and candidate trust at the center. It shows where agents fit across sourcing, screening, and interview notes, with people making the final calls.

• Building a clear system design: clean job briefs, rubric-based scoring, an orchestration layer to run agents, policy rules for data and access, and tight links into ATS, HRIS, CRM, and meeting tools. Leaders can roll this out on a small set of roles, measure results, then expand.

• Sourcing improves with multi-source search, consent-first outreach, and audit trails. Agents widen reach without skew, track source mix, and feed ready-to-call leads to recruiters. Metrics include response rate, source diversity, and cost per sourced lead.

• Screening and interviews become consistent and transparent. Agents parse CVs against job-related rubrics, run bias checks before sharing shortlists, and produce interview summaries with time-coded evidence. Recruiters review, compare scorecards, and decide faster.

What “Agentic AI” Means for Hiring

Agentic AI in recruitment is simple to grasp. Think of small software agents that work toward a clear hiring goal. Each agent knows the rules. It can call the right tools, take the next step, and hand work back to people for review. But recruiters stay in charge and make the final decision. IBM and AWS both describe this class of systems as goal-driven agents that operate with limited supervision and coordinate through orchestration.

Here is where these agents fit in a modern funnel. At intake, they turn a job brief into a clean skills rubric. In sourcing, they build search plans and run consent-first outreach. In screening, they parse CVs, tag skills, and score candidates against job-related criteria. During interviews, they summarize evidence from transcripts (with consent) and produce clear scorecards. In offer support, they help with scheduling, status updates, and basic paperwork. This is AI-based talent acquisition that adds speed without losing judgment.
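To make "score candidates against job-related criteria" concrete, here is a minimal Python sketch. It assumes a hypothetical rubric of weighted criteria approved at intake and a parser that has already tagged which criteria a CV shows evidence for. `Criterion` and `score_candidate` are illustrative names, not an ATS or vendor API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Criterion:
    name: str             # job-related skill or requirement from the approved brief
    weight: float         # relative importance agreed at intake
    required: bool = False


def score_candidate(rubric: list[Criterion], evidence: set[str]) -> dict:
    """Score a candidate 0-100 against the rubric.

    `evidence` is the set of criterion names the (hypothetical) CV parser
    found support for. The agent never rejects on its own: missing
    required criteria are flagged for a recruiter instead.
    """
    total = sum(c.weight for c in rubric)
    met = [c for c in rubric if c.name in evidence]
    score = round(100 * sum(c.weight for c in met) / total, 1) if total else 0.0
    missing_required = [c.name for c in rubric if c.required and c.name not in evidence]
    return {
        "score": score,
        "matched": sorted(c.name for c in met),
        "missing_required": missing_required,
        "needs_human_review": bool(missing_required),
    }


rubric = [
    Criterion("python", 3, required=True),
    Criterion("sql", 2),
    Criterion("airflow", 1),
]
result = score_candidate(rubric, {"python", "sql"})
```

Keeping the rubric as plain data makes it easy for a recruiter to approve it at intake and for auditors to see exactly which criteria drove a score.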

Adoption of these tools is rising. LinkedIn's Future of Recruiting report shows that most talent leaders expect AI to speed up their work, and a growing share of recruiters now list AI skills on their profiles. SHRM's trend research also stresses that AI should augment people and that hiring decisions require human oversight. These signals match how agentic AI should run in TA: it removes repetitive steps, keeps people in the loop, and raises throughput without cutting corners.

Think of the agents as the engine behind an automated hiring process that you can audit. Each step uses job-related data, keeps a record of what happened, and flags edge cases for a human to review. Your ATS or an AI-based recruitment platform can orchestrate the flow, so recruiters focus on high-value work: coaching candidates, aligning with hiring managers, and deciding the hire.

This blog will show how AI in recruitment sits across intake, sourcing, screening, interview support, and offer support for IT, BFSI, Healthcare, Manufacturing, Retail, and Pharma. It stays practical and keeps the candidate at the center. When you want deeper guidance, use the companion guide linked at the end for step-by-step setup and design choices.

Ethical Ground Rules for AI in Talent Decisions

Principles to anchor your program

Treat AI in recruitment as a human-led system. Build on four pillars: fairness, consent, transparency, and accountability. Use job-related criteria only. Tell candidates when and how AI is used. Keep people in charge at key points. Keep records that show what the system did and why. These points line up with the OECD AI Principles and NIST's AI Risk Management Framework, which call for transparency, human oversight, and traceability across the AI lifecycle.

This approach makes AI talent acquisition traceable and turns your automated hiring process into something you can explain and defend. Your ATS or AI-based recruitment platform should log prompts, data used, actions taken, and approvals.
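A minimal sketch of such a log, assuming an append-only JSON-lines store. The field names (`agent`, `prompt`, `data_fields`, `approved_by`) and the agent names are hypothetical; a real ATS or platform will have its own event schema, and the in-memory buffer here only stands in for durable storage.

```python
import io
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass
class AuditEvent:
    """One agent action, recorded so a reviewer can see what happened and why."""
    agent: str              # hypothetical agent name, e.g. "screening-agent"
    action: str             # what the agent did, e.g. "scored_cv"
    prompt: str             # instruction or prompt version the agent ran with
    data_fields: list[str]  # inputs the agent was allowed to read
    approved_by: Optional[str] = None  # recruiter who signed off, if any
    ts: str = field(default_factory=lambda: datetime.now(timezone.utc).isoformat())


def append_event(log, event: AuditEvent) -> None:
    """Append the event as one JSON line; earlier lines are never rewritten."""
    log.write(json.dumps(asdict(event), sort_keys=True) + "\n")


def export_events(log_text: str, agent: Optional[str] = None) -> list[dict]:
    """Parse the JSON-lines log, optionally filtered to one agent, for an audit request."""
    events = [json.loads(line) for line in log_text.splitlines() if line.strip()]
    return [e for e in events if agent is None or e["agent"] == agent]


# Usage: an in-memory buffer stands in for a real append-only store.
buf = io.StringIO()
append_event(buf, AuditEvent("screening-agent", "scored_cv", "rubric-v3 scoring prompt",
                             ["skills", "work_history"], approved_by="recruiter_17"))
append_event(buf, AuditEvent("sourcing-agent", "sent_outreach", "template-12", ["skills"]))
screening = export_events(buf.getvalue(), agent="screening-agent")
```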

Data limits you should enforce

Scope inputs to what the job needs. Do not feed in sensitive traits like race, religion, health, or exact location unless the law clearly allows it and you have a valid basis. If you operate in the EU or UK, be mindful that GDPR Article 22 limits solely automated decisions with legal or similarly significant effects and requires safeguards and clear information for individuals. Keep retention periods short and documented. Redact PII that is not needed for hiring.

Build rules so the AI talent pool you create relies on job skills and recent work, not proxies that can skew outcomes. These controls keep automation in the recruitment process compliant and fair.
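One way to enforce these limits in code is an allowlist applied before any agent sees a record. This is a minimal sketch, assuming a hypothetical set of job-related fields agreed at intake; the regexes are deliberately rough and can over-redact (a year range such as "2019 - 2023" also matches the phone pattern), so treat them as a starting point, not a complete PII detector.

```python
import re

# Hypothetical allowlist agreed at role intake; every other field is dropped.
ALLOWED_FIELDS = {"skills", "work_history", "certifications", "summary"}

# Rough patterns for contact details in free text. They can over-redact.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def minimize(record: dict) -> dict:
    """Keep only allowlisted fields and mask contact details in the summary."""
    kept = {k: v for k, v in record.items() if k in ALLOWED_FIELDS}
    if "summary" in kept:
        text = EMAIL.sub("[email]", kept["summary"])
        kept["summary"] = PHONE.sub("[phone]", text)
    return kept


record = {
    "name": "A. Candidate",
    "religion": "not collected for scoring",
    "zip": "10001",
    "skills": ["python", "sql"],
    "summary": "Reach me at a@b.com or +1 555 123 4567.",
}
clean = minimize(record)
```

An allowlist fails closed: a new field added upstream is excluded until someone approves it as job-related, which is the safer default.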

Human review points

Place people at key points to review the AI's inputs and outputs and guard against bias.

- Role intake: approve the rubric and allowed data.

- Shortlists: review adverse impact checks before a list goes out.

- Final decisions: compare evidence, question outliers, and sign off.

Human oversight keeps AI in recruitment accountable and aligned with policy.

Legal awareness and auditability

In New York City, Local Law 144 requires a yearly bias audit of automated hiring tools, public posting of the audit summary, and specific notices to candidates. The city’s FAQ explains impact-ratio reporting across sex and race or ethnicity and limits on using inferred demographics.
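The impact ratio itself is simple arithmetic: each group's selection rate divided by the rate of the most-selected group. Here is a minimal Python sketch, assuming you already have applicant and selection counts per category. The group labels are placeholders; which categories to report, and how to treat very small groups, is a question for your counsel and the city's rules. The 0.8 threshold follows the four-fifths rule of thumb in the EEOC's Uniform Guidelines and is a trigger for investigation, not a legal safe harbor.

```python
def selection_rates(applicants: dict[str, int], selected: dict[str, int]) -> dict[str, float]:
    """Selection rate per group (selected / applicants), skipping empty groups."""
    return {g: selected.get(g, 0) / n for g, n in applicants.items() if n > 0}


def impact_ratios(applicants: dict[str, int], selected: dict[str, int]) -> dict[str, float]:
    """Each group's selection rate divided by the highest group's rate."""
    rates = selection_rates(applicants, selected)
    top = max(rates.values(), default=0.0)
    if top == 0:
        return {}  # nobody was selected at this stage, so ratios are undefined
    return {g: r / top for g, r in rates.items()}


def below_four_fifths(ratios: dict[str, float], threshold: float = 0.8) -> list[str]:
    """Groups whose impact ratio falls under the threshold and needs review."""
    return sorted(g for g, r in ratios.items() if r < threshold)


# Placeholder counts for one stage (e.g. screening pass).
applicants = {"group_a": 200, "group_b": 150}
selected = {"group_a": 60, "group_b": 30}
ratios = impact_ratios(applicants, selected)
flagged = below_four_fifths(ratios)
```

Here group_a is selected at 30 percent and group_b at 20 percent, so group_b's ratio is about 0.67 and it gets flagged for review.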

The EU AI Act classifies many employment use cases, including recruitment and candidate selection, as high risk and imposes risk management, data governance, logging, and transparency duties on providers and deployers.

The EEOC has issued technical assistance on AI in selection procedures under Title VII. Employers can be held responsible when a vendor's tool causes disparate impact. Keep notices, test for impact, and maintain records that show job-relatedness. Your AI-based recruitment platform should export audit logs on demand. These guardrails are not red tape. They protect candidates, speed up reviews, and help your team defend decisions with facts.


Sourcing Agents with Fairness Checks and Audit Trails

What the sourcing agent does

In a modern AI recruitment setup, a sourcing agent turns a role brief into targeted search strings and scans approved job boards and talent hubs. For tech and data roles, it looks at GitHub and Kaggle. For Healthcare and Pharma, it checks clinical forums. For BFSI, it searches risk and procurement communities. This is practical AI talent acquisition that builds an AI talent pool from verified, job-relevant sources.

Outreach that earns

The agent drafts consent-first messages, respects frequency caps, and sends within local time windows. It personalizes copy to the role and keeps it short. As part of the automated hiring process, recruiters approve templates before they go live.
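The send rules in this paragraph reduce to a small gate that runs before every message. This is a minimal sketch under assumed policy values (a 09:00 to 18:00 local window, no weekend sends, at most two messages per 14 days); set real values with your recruiters and legal team. It uses a fixed UTC offset per candidate to stay self-contained, so it ignores daylight-saving changes; a production version would use a named time zone via `zoneinfo`. The function name `may_send` is hypothetical.

```python
from datetime import datetime, timedelta, timezone

# Assumed policy values, not recommendations.
SEND_START_HOUR = 9             # earliest local send hour (inclusive)
SEND_END_HOUR = 18              # latest local send hour (exclusive)
MAX_MESSAGES = 2                # frequency cap per candidate...
CAP_WINDOW = timedelta(days=14)  # ...within this rolling window


def may_send(now_utc: datetime, utc_offset_hours: float, sent_history: list[datetime],
             opted_out: bool, has_consent_basis: bool) -> bool:
    """Return True only if an outreach message may go out right now."""
    if opted_out or not has_consent_basis:
        return False  # consent first: an opt-out or missing basis blocks sending
    local = now_utc.astimezone(timezone(timedelta(hours=utc_offset_hours)))
    if local.weekday() >= 5:  # Saturday or Sunday in the candidate's time zone
        return False
    if not (SEND_START_HOUR <= local.hour < SEND_END_HOUR):
        return False
    recent = [t for t in sent_history if now_utc - t < CAP_WINDOW]
    return len(recent) < MAX_MESSAGES


now = datetime(2025, 3, 4, 14, 0, tzinfo=timezone.utc)  # a Tuesday, 14:00 UTC
```

At that moment a candidate at UTC-5 is at 09:00 local and can be contacted, while one at UTC+5:30 is at 19:30 and is held until the next window.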

Fairness by design

Balance sources so one channel does not dominate. Avoid proxy signals that can skew results. De-duplicate without downranking by school or zip code. The goal is a wider AI talent pool built on job-related criteria only. These rules keep automation in the recruitment process aligned with policy and candidate trust.
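Here is a minimal sketch of de-duplication that matches on identity only and a check for a dominant channel. It assumes each lead carries an email address; real sourcing data may need matching on profile URLs or other identifiers. The 50 percent share cap is an illustrative assumption, and the function names are hypothetical.

```python
from collections import Counter


def dedupe(leads: list[dict]) -> list[dict]:
    """Merge duplicate leads by normalized email, keeping arrival order.

    Only the identity field is used for matching. School, zip code and
    similar attributes never influence ordering or which record survives.
    """
    merged: dict[str, dict] = {}
    for lead in leads:
        key = lead["email"].strip().lower()
        if key in merged:
            merged[key]["sources"].add(lead["source"])  # remember every channel
        else:
            merged[key] = {**lead, "sources": {lead["source"]}}
    return list(merged.values())


def dominant_channels(leads: list[dict], max_share: float = 0.5) -> list[str]:
    """Channels whose share of leads exceeds the cap (first-seen source)."""
    counts = Counter(lead["source"] for lead in leads)
    total = sum(counts.values())
    return sorted(ch for ch, n in counts.items() if n / total > max_share)


leads = [
    {"email": "A@x.com", "source": "github"},
    {"email": "a@x.com ", "source": "kaggle"},
    {"email": "b@y.com", "source": "github"},
    {"email": "c@z.com", "source": "github"},
    {"email": "d@w.com", "source": "forum"},
]
unique = dedupe(leads)
```

In this sample GitHub supplies three of four unique leads, so it would be flagged as dominant and the agent could widen the search to other approved channels.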

What to log for audits

Record the source mix by channel, exact queries used, send times, opt-outs, and response rates. Keep consent records. Your ATS or AI-based recruitment platform should capture these events and export them on request.

KPIs to track

- Sourced candidates per role and per channel

- Response rate to first outreach and within 7 days

- Source diversity ratio across channels

- Cost per sourced lead
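The post does not pin down exact formulas for these KPIs, so the sketch below is one reasonable reading, not a standard. It assumes each lead record has hypothetical fields `channel`, `contacted`, `reply_after_days` (None if no reply), and `passed_min_screen`. "Source diversity ratio" is computed here as normalized Shannon entropy (Pielou's evenness): 0.0 for a single channel, 1.0 for a perfectly even mix across the channels observed.

```python
import math
from collections import Counter


def response_rates(leads: list[dict], within_days: int = 7) -> tuple[float, float]:
    """(overall reply rate, reply rate within `within_days`) among contacted leads."""
    contacted = [lead for lead in leads if lead["contacted"]]
    if not contacted:
        return 0.0, 0.0
    replied = [lead for lead in contacted if lead["reply_after_days"] is not None]
    fast = [lead for lead in replied if lead["reply_after_days"] <= within_days]
    return len(replied) / len(contacted), len(fast) / len(contacted)


def source_diversity(leads: list[dict]) -> float:
    """Evenness of the channel mix (normalized Shannon entropy), 0.0 to 1.0."""
    counts = Counter(lead["channel"] for lead in leads)
    if len(counts) < 2:
        return 0.0  # a single channel has no diversity
    total = sum(counts.values())
    entropy = -sum((n / total) * math.log(n / total) for n in counts.values())
    return entropy / math.log(len(counts))


def cost_per_sourced_lead(sourcing_spend: float, leads: list[dict]) -> float:
    """Total sourcing spend divided by leads that pass a minimum screen."""
    passed = sum(1 for lead in leads if lead["passed_min_screen"])
    return sourcing_spend / passed if passed else float("inf")


leads = [
    {"channel": "github", "contacted": True, "reply_after_days": 2, "passed_min_screen": True},
    {"channel": "github", "contacted": True, "reply_after_days": 10, "passed_min_screen": False},
    {"channel": "kaggle", "contacted": True, "reply_after_days": None, "passed_min_screen": True},
    {"channel": "kaggle", "contacted": True, "reply_after_days": None, "passed_min_screen": False},
]
```

Because evenness is normalized by the channels actually seen, it should be read next to the raw channel count: an even split across two channels scores the same as an even split across five.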

Governance, Risk, and Data Security

Good governance makes agentic AI in recruitment safe, traceable, and ready for audit. Build it on four routine functions. Set policy. Map data and processes. Measure risk and model health. Manage change with clear owners and logs. NIST's AI Risk Management Framework groups these as Govern, Map, Measure, and Manage, and it is a solid blueprint to follow.

Data map

Write down what you collect, why you collect it, where it lives, who can see it, and how long you keep it. Tag each field as job-related or not. Redact PII that is not needed for hiring. Record consent for anyone added to your AI talent pool and keep a simple process to honor access or deletion requests.
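A data map is most useful when it is machine-readable, so retention and job-relatedness can be checked automatically. This minimal sketch assumes a hypothetical `FieldEntry` record per field and candidate records that note when data was collected. It flags any field that is past its retention period, is tagged as not job-related, or is missing from the map altogether.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class FieldEntry:
    name: str                  # field name as stored
    purpose: str               # why it is collected
    location: str              # system of record, e.g. "ATS"
    readers: frozenset[str]    # roles allowed to see it
    retention_days: int        # documented retention period
    job_related: bool          # tagged at intake


def fields_to_review(records: list[dict], data_map: list[FieldEntry],
                     today: date) -> list[tuple[str, str]]:
    """(record id, field) pairs that are overdue, not job-related, or unmapped."""
    by_name = {entry.name: entry for entry in data_map}
    flagged = []
    for rec in records:
        age_days = (today - rec["collected_on"]).days
        for name in rec["fields"]:
            entry = by_name.get(name)
            if entry is None or not entry.job_related or age_days > entry.retention_days:
                flagged.append((rec["id"], name))
    return flagged


data_map = [
    FieldEntry("skills", "rubric scoring", "ATS", frozenset({"recruiter"}), 365, True),
    FieldEntry("photo", "none", "ATS", frozenset(), 0, False),
]
records = [
    {"id": "c1", "collected_on": date(2024, 1, 10), "fields": ["skills"]},
    {"id": "c2", "collected_on": date(2025, 1, 10), "fields": ["skills", "photo", "hobbies"]},
]
review = fields_to_review(records, data_map, today=date(2025, 3, 1))
```

Flagged pairs then feed the deletion or redaction workflow, and the same map answers access requests by showing where each field lives.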

Controls

Use role-based access, least privilege, and logging. Encrypt data at rest and in transit. Rotate keys and secrets. Run incident drills. Review vendors, subprocessors, and data transfer paths before any agent connects. Your ATS or AI-based recruitment platform should enforce these controls and keep a full event log so automation in the recruitment process is visible end to end.
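Role-based access with least privilege can be as simple as a default-deny lookup that logs every decision. This is a minimal sketch with a hypothetical role-to-permission map; in practice these rules live in your identity provider or platform, not in application code.

```python
# Hypothetical role-to-permission map. Each agent gets only what its step needs.
PERMISSIONS: dict[str, frozenset[str]] = {
    "sourcing-agent": frozenset({"read:job_brief", "write:leads"}),
    "screening-agent": frozenset({"read:job_brief", "read:cv", "write:scores"}),
    "recruiter": frozenset({"read:job_brief", "read:cv", "read:scores", "approve:shortlist"}),
}


class AccessDenied(Exception):
    """Raised when an actor asks for a permission it was not granted."""


def authorize(actor: str, permission: str, audit_log: list[dict]) -> None:
    """Default-deny check that records every decision, allowed or not."""
    allowed = permission in PERMISSIONS.get(actor, frozenset())
    audit_log.append({"actor": actor, "permission": permission, "allowed": allowed})
    if not allowed:
        raise AccessDenied(f"{actor} lacks {permission}")
```

Note that no agent holds `approve:shortlist`: approval stays with a recruiter, which keeps the human review points enforceable rather than advisory.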

Model health

Check drift and accuracy every month. Re-test rubric scoring on a labeled sample. Track false rejects and pass-through by source and stage. Record every change to prompts, rubrics, models, datasets, and connectors. Keep a rollback plan so you can pause the automated hiring process and fall back to manual steps if needed.
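The monthly re-test can be scripted against a labeled sample where a recruiter has marked each candidate as qualified or not. This minimal sketch assumes hypothetical fields `label_qualified`, `agent_advanced`, and `source`. The baseline and 5-point tolerance are illustrative; a flag means "pause and review", with fallback to manual steps, not an automatic fix.

```python
from collections import defaultdict


def false_reject_rate(samples: list[dict]) -> float:
    """Share of human-labeled qualified candidates the agent did not advance."""
    qualified = [s for s in samples if s["label_qualified"]]
    if not qualified:
        return 0.0
    return sum(1 for s in qualified if not s["agent_advanced"]) / len(qualified)


def pass_through_by(samples: list[dict], key: str) -> dict[str, float]:
    """Agent pass-through rate grouped by a field such as 'source' or 'stage'."""
    totals: dict[str, int] = defaultdict(int)
    advanced: dict[str, int] = defaultdict(int)
    for s in samples:
        totals[s[key]] += 1
        advanced[s[key]] += int(s["agent_advanced"])
    return {k: advanced[k] / totals[k] for k in totals}


def monthly_health_check(samples: list[dict], baseline_frr: float,
                         tolerance: float = 0.05) -> dict:
    """Flag a pause-and-review when false rejects drift above the recorded baseline."""
    frr = false_reject_rate(samples)
    return {"false_reject_rate": frr, "pause_and_review": frr > baseline_frr + tolerance}


samples = [
    {"source": "github", "label_qualified": True, "agent_advanced": True},
    {"source": "github", "label_qualified": True, "agent_advanced": False},
    {"source": "referral", "label_qualified": False, "agent_advanced": False},
    {"source": "referral", "label_qualified": True, "agent_advanced": True},
]
check = monthly_health_check(samples, baseline_frr=0.2)
```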

Oversight

Create a small AI review board across TA, Legal, InfoSec, and the business. Meet on a set cadence. Review fairness tests, complaints, and incidents. Approve expansions to new roles or sources. Keep minutes and action items.

Regulatory alignment

In the EU, AI used for employment and hiring generally falls within the AI Act's high-risk scope. Keep risk management, logging, data governance, and transparency duties in place from day one so you can show compliance.

Metrics that matter

Track the right numbers to prove that AI in recruitment is working across speed, quality, fairness, candidate experience, and cost. Keep definitions tight, track them weekly, and review them at a fixed cadence with TA, HR, and business leads.

Speed

- Time to shortlist: Days from role intake approval to first qualified shortlist. Formula: date of first shortlist minus date of approved brief.

- Time to hire: Days from job post to accepted offer. Formula: offer accept date minus post date.

- Recruiter hours saved: Manual hours avoided by agents for sourcing, screening, and notes. Method: baseline a manual week, then compare after agents go live.
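These formulas are plain date arithmetic. A minimal sketch, assuming calendar days (swap in a business-day count if that is how your team reports) and hypothetical function names:

```python
from datetime import date


def days_between(start: date, end: date) -> int:
    """Whole calendar days from start to end; rejects reversed dates."""
    if end < start:
        raise ValueError("end date is before start date")
    return (end - start).days


def time_to_shortlist(brief_approved: date, first_shortlist: date) -> int:
    return days_between(brief_approved, first_shortlist)


def time_to_hire(job_posted: date, offer_accepted: date) -> int:
    return days_between(job_posted, offer_accepted)


def recruiter_hours_saved(baseline_week_hours: float, current_week_hours: float) -> float:
    """Baseline manual week minus the same tasks after agents go live.

    A negative result means the agents added work. It is not clamped to
    zero, because that regression is worth seeing.
    """
    return baseline_week_hours - current_week_hours
```

For example, a brief approved on 3 March 2025 with a first shortlist on 10 March gives a time to shortlist of 7 days.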

Reported gains vary, but some settings cite time-to-fill reductions of about 40 percent. Shorter fills tie directly to revenue timing and project starts, so measure against your own baseline before and after agents go live.

Quality

- Pass-through by stage: share of candidates moving from shortlist to interview to offer. Formula: count advanced at stage ÷ count entered stage.

- Quality of hire at 90 days: composite of hiring manager rating, early performance proxy, and retention. Method: choose 2 to 3 job-related indicators per role, use a simple 0 to 100 scoring sheet, and track the trend by channel and recruiter.

- Hiring manager satisfaction: post-fill score on shortlist relevance and interview quality.
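A minimal sketch of both calculations, assuming stage counts are recorded in funnel order and that each quality indicator has already been scaled to 0 to 100. The indicator names and weights in the usage lines are hypothetical; pick your own per role.

```python
def pass_through(entered: int, advanced: int) -> float:
    """Count advanced at a stage divided by count that entered it."""
    return advanced / entered if entered else 0.0


def funnel(stage_counts: list[tuple[str, int]]) -> dict[str, float]:
    """Conversion between consecutive stages, e.g. shortlist -> interview."""
    return {
        f"{a}->{b}": pass_through(n_a, n_b)
        for (a, n_a), (b, n_b) in zip(stage_counts, stage_counts[1:])
    }


def quality_of_hire(indicators: dict[str, float], weights: dict[str, float]) -> float:
    """Weighted average of 2-3 job-related indicators, each already on a 0-100 scale."""
    total = sum(weights.values())
    return sum(indicators[name] * w for name, w in weights.items()) / total


rates = funnel([("shortlist", 40), ("interview", 20), ("offer", 5)])
qoh = quality_of_hire(
    {"manager_rating": 80, "ramp_score": 70, "retained_90d": 100},
    {"manager_rating": 5, "ramp_score": 3, "retained_90d": 2},
)
```

With these inputs, half the shortlist reaches interview, a quarter of interviewees get offers, and the composite quality score is 81.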

Your ATS or AI-based recruitment platform should surface these metrics in a single view.

Fairness

- Impact ratio by stage: selection rate of one group divided by the selection rate of the group with the highest selection rate.

Guardrail: investigate if any group's rate falls below 80 percent of the top group's rate. Check this at sourcing response, screening pass, interview pass, and offer. Keep logs and corrective notes so automation in the recruitment process is explainable.

Candidate experience

- Candidate NPS: ask after the screening outcome and after the final decision.

- Reply time: hours from candidate message to first reply.

- Status latency: days in stage without an update.

Use these signals to improve outbound messages to your AI talent pool and reduce drop-off.

Cost

- Cost per hire by channel: total spend for the role divided by hires from that channel. Include ads, tools, agency fees, assessments, and recruiter time.

- Cost per sourced lead: total sourcing spend divided by leads that pass a minimum screen.

- Tool ROI: hours saved multiplied by loaded hourly cost, minus tool spend, gives the net return; divide it by tool spend to express ROI as a ratio.
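A minimal sketch of the cost metrics, assuming spend per channel already includes ads, tools, agency fees, assessments, and recruiter time. Channels with no hires are skipped rather than reported as infinite cost. Function names and figures are illustrative.

```python
def cost_per_hire_by_channel(spend: dict[str, float], hires: dict[str, int]) -> dict[str, float]:
    """Total spend per channel divided by hires from that channel."""
    return {ch: total / hires[ch] for ch, total in spend.items() if hires.get(ch, 0) > 0}


def tool_net_return(hours_saved: float, loaded_hourly_cost: float, tool_spend: float) -> float:
    """Value of recruiter time saved, minus what the tool costs."""
    return hours_saved * loaded_hourly_cost - tool_spend


def tool_roi(hours_saved: float, loaded_hourly_cost: float, tool_spend: float) -> float:
    """Net return expressed as a ratio of tool spend (0.6 means a 60 percent return)."""
    return tool_net_return(hours_saved, loaded_hourly_cost, tool_spend) / tool_spend


cph = cost_per_hire_by_channel(
    {"job_board": 12000, "agency": 30000, "referral": 2000},
    {"job_board": 4, "agency": 2, "referral": 0},
)
```

For instance, 400 hours saved at a loaded cost of 60 per hour against 15,000 in tool spend gives a net return of 9,000, or an ROI of 0.6.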

How to act on these numbers

- If time to shortlist slips, tighten the rubric and raise intake quality before adding more sources.

- If pass-through stalls at screening, review job-related criteria and re-test scoring on a labeled sample.

- If the impact ratio trips the 80 percent check, pause automation at that stage, review features, and correct the data or rubric.

- If candidate NPS drops, shorten reply times and add brief feedback templates for rejections.

- If cost per hire rises, shift budget to channels with stronger pass-through and better NPS.

Conclusion

Agentic AI offers a practical way to scale recruiting without compromising standards. By embedding purpose-built agents across intake, sourcing, screening, and offer support, teams gain both speed and clarity while ensuring final decisions are ethical and made by people. Each step becomes more transparent, more consistent, and easier to trace. That’s not just automation, but accountability built into the hiring process.

For talent leaders, the shift is already underway. The organizations moving first aren't just filling roles faster. They're designing hiring systems that are fair by default, audit-ready by design, and able to adapt as laws, tools, and expectations evolve. Agentic AI isn't a future trend. It's hiring infrastructure that organizations are already adopting in 2025, and when it is built ethically and with intent, it can hold up under scrutiny.

Ready to move fast without cutting corners? Contact VBeyond to get your pilot underway.

FAQs

How is AI used in recruitment today?

AI in recruitment supports intake, sourcing, screening, interview notes, and offer support. Agents draft search plans, parse CVs against a rubric, schedule interviews, and produce concise summaries. A modern AI-based recruitment platform or ATS orchestrates these steps into an automated hiring process while people make final decisions.

Does AI in recruitment reduce hiring bias?

It can, when built and run correctly. Use job-related data only, test impact by stage, keep audit logs, and require human review before shortlists and offers. With these controls, AI talent acquisition and automation in the recruitment process help reduce noise and improve consistency across decisions.

How do AI-powered interviews work on mobile?

With consent, the app records the call, transcribes it, and tags notes to the role rubric. The agent drafts a summary with time-coded evidence. Recruiters review on the go, edit as needed, and save to the ATS or AI-based recruitment platform.

How can agentic AI sourcing work on Android devices?

Recruiters trigger a sourcing agent from the mobile app, review queries, and approve outreach templates. The agent builds an AI talent pool, sends consent-first messages in local time windows, and alerts recruiters to replies. All actions post back to the ATS so automation in the recruitment process stays traceable.

What are the benefits of ethical agentic AI in hiring?

Faster time to shortlist and hire, consistent screening, better candidate communication, and audit-ready records. Teams spend more time with finalists and less on repeat tasks. In short, ethical AI in recruitment turns routine steps into a reliable automated hiring process that improves outcomes across roles and hiring models.