Key Takeaways

• Build leadership literacy before scale. Strategic fluency, not speed, will determine who can deploy AI responsibly and align it with business models, investor expectations, and governance.

• Invest in workforce imagination. Competitive advantage will stem from cognitive adaptability: training employees to interpret, challenge, and co-create with intelligent systems.

• Treat culture as the collaboration protocol. Sustainable adoption depends on traceability, ethical discipline, and shared behavioral norms.

Work is being rebuilt in real time, reshaped by the growing presence of AI in the workplace. AI is entering teams, processes, and decisions that once depended entirely on people. What matters now is how that mix changes the rhythm of work itself.

For the workforce, the shift is practical: what skills hold value, how teams share judgment with machines, and how confidence is maintained when part of the task is invisible. For leadership, it is structural: how to shape roles, learning, and accountability so that both sides of intelligence—human and artificial—work in step.

The next phase of productivity will come from designing that relationship well. Human-AI collaboration is the new texture of work.

The Emerging Shape of Agentic AI Adoption

Capgemini, in its report Rise of agentic AI, describes agentic AI as a defining technological shift — systems that move beyond traditional, task-based AI to manage end-to-end processes with minimal human intervention. According to the report, these agents are evolving “from tools to team members,” marking a new phase in enterprise productivity and growth. Given the agentic AI adoption trajectory across the surveyed countries, the firm projects that AI agents could generate up to $450 billion in economic value by 2028, combining revenue gains with cost savings. Competitive momentum is accelerating, as more than 90% of business leaders believe that organizations able to scale agentic AI within the next year will outperform their competitors.

Even so, adoption remains fragmented. According to the report, only 14% of companies have implemented AI agents (12% at partial scale and 2% at full scale), while 23% have launched pilots and another 61% are in the exploratory phase, preparing for deployment. Most enterprise AI agents operate at low levels of autonomy; just 15% of business processes are expected to reach semi- or fully autonomous levels in the next 12 months, rising to 25% by 2028. Notably, Capgemini emphasizes that AI agents can still deliver measurable value even at intermediate levels of autonomy, provided they are embedded within effective human-supervised systems.

The study also highlights a growing trust deficit. Confidence in fully autonomous AI agents has fallen from 43% to 27% over the past year, reflecting a shift from initial optimism to pragmatic caution. Capgemini attributes this decline to unresolved ethical issues such as data privacy, bias, and algorithmic opacity (in effect, gaps in AI agent governance), combined with limited organizational readiness: fewer than one in five companies report high data maturity, and over 80% lack the infrastructure to scale agentic systems effectively. At the same time, 61% of organizations report increasing employee anxiety about job displacement, even as few are investing in reskilling. The report concludes that sustainable adoption will require redesigning processes, transforming workforce structures, and striking a deliberate balance between agent autonomy and human judgment.

According to Gartner (2025), enthusiasm for agentic AI is colliding with operational reality. More than 40% of agentic AI projects will likely be discontinued by 2027 because of rising costs, weak business cases, and poor risk controls. Analysts warn that many initiatives are driven by hype rather than need, producing a wave of “agent washing,” where legacy automation tools are rebranded as agentic AI. By Gartner’s estimate, only about 130 vendors currently have genuine agentic capabilities. While long-term potential remains significant (with 15% of work decisions expected to be autonomous by 2028), the firm cautions that near-term value will depend on disciplined deployment (which implies an effective AI readiness assessment), clear ROI, and realistic expectations.

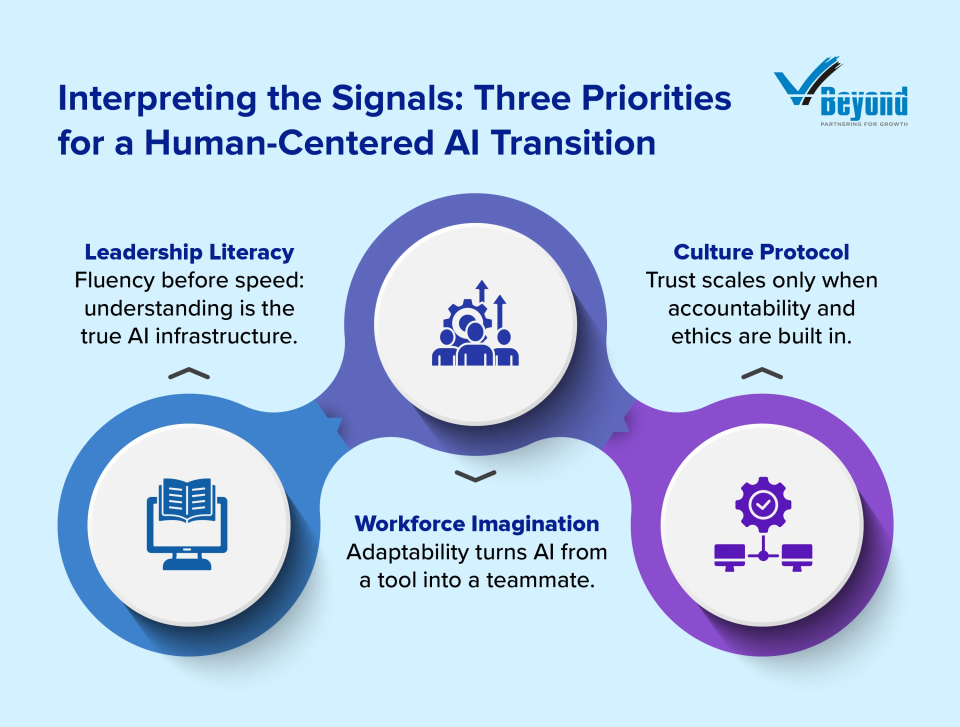

Interpreting the Signals for the Future of Work with AI: Three Priorities for a Human-Centered Transition

Capgemini, Gartner, and McKinsey (in One year of agentic AI: Six lessons from the people doing the work) all point to a pivotal moment for agentic AI: a technology advancing faster than trust, readiness, or clear business models. Their findings underscore the urgency of disciplined execution and human-AI collaboration. Building on those insights, we interpret the emerging picture through a different lens: what this transition means for people inside organizations. The path forward will not hinge only on data maturity or cost control, but on how leadership, workforce capability, and culture evolve to work alongside intelligent systems. Three priorities stand out:

I. Leadership Literacy Is the New Digital Infrastructure

Agentic AI is moving faster than most governance models can adapt. The temptation in many boardrooms is to greenlight pilots in every business unit, often under pressure to appear technologically forward. But literacy—not speed—is what separates early adopters from early casualties. Before any deployment, leadership teams must build a precise understanding of how AI agents interact with the company’s business model, capital structure, and operational risk profile.

The starting point is a strategic diagnostic (an AI readiness assessment), not a technical roadmap. Executives should ask: Which parts of our value chain depend on judgment, and which on execution? Where does autonomy amplify outcomes, and where does it erode control? A consumer goods firm, for instance, might find that agentic AI adoption enhances forecasting accuracy but destabilizes supplier negotiations if autonomy thresholds aren’t defined. Mapping these dependencies early prevents the false efficiency of automation without accountability.

Next comes alignment with investors and governance bodies. Deploying intelligent systems without clear oversight triggers reputational and regulatory exposure. Boards must understand what level of autonomy is being introduced, what data governs it, and how outcomes will be validated. Investor confidence now depends as much on the clarity of AI agent governance as on quarterly results. A board that can articulate the strategic intent, guardrails, and ethical posture of its AI initiatives earns trust before results are visible.

Once alignment is secured, leadership must develop agentic literacy across the executive layer. This doesn’t mean technical mastery, but conceptual fluency: understanding the logic, limits, and escalation points of AI-driven decision-making. Workshops, simulations, and cross-functional reviews help leaders anticipate how autonomous systems might behave under uncertainty. The aim is to normalize questioning the system, not blindly following it.

From there, organizations can move toward readiness testing — controlled pilots that test both technical and managerial responses. This phase is where literacy turns into judgment: when to override, when to escalate, and when to let the system learn. Successful enterprises don’t measure readiness by model accuracy alone, but by how clearly decision rights, accountability, and human oversight are defined.

Finally, continuous evaluation must become part of the leadership rhythm. The executive team should institutionalize periodic reviews of agentic initiatives against strategic and ethical benchmarks. AI maturity will not be measured by the number of models in production, but by the organization’s capacity to think critically about them.

Leadership literacy, in short, is the modern equivalent of infrastructure. Without it, AI strategies and AI readiness assessments remain surface-deep: impressive in presentation, fragile in execution. With it, organizations create conditions for scalable agentic AI adoption, trust, and enduring value creation.

II. Workforce Imagination Is a Competitive Advantage

Once leadership clarity is established, the next frontier is capability: not just technical upskilling, but the cognitive and creative adaptability needed to build an AI-augmented workforce. Agentic AI changes the texture of everyday work: decisions become distributed, feedback loops shorten, and human contribution shifts from repetitive execution to interpretation, curation, and supervision. In this transition, the defining organizational strength will be workforce imagination: the ability of employees to adapt, learn, and collaborate with systems that think and act in unfamiliar ways.

Most organizations approach reskilling as a compliance exercise, updating technical skills to keep pace with new tools. That model has little relevance once AI enters the workplace. Agentic systems learn and evolve; so must the people who interact with them. Companies need layered learning architectures that combine technical literacy with behavioral agility. Technical modules should focus on what AI agents do and don’t do: how they make decisions, what constitutes a valid override, and where human review is critical. But this must be paired with learning experiences that build creative problem-solving and comfort with ambiguity. The most effective programs blend human and system learning, training people to question model outputs, synthesize insights, and improvise responsibly when data alone cannot decide.

Implementation should begin where impact is most visible. Instead of trying to reskill the entire workforce at once, organizations can identify high-influence workflows, areas where humans and AI collaborate closely, such as customer service triage, quality review, or financial risk assessment. These “learning zones” serve as micro-labs to test new interaction models. Employees involved in these pilots should be encouraged to document insights: where the agent improves efficiency, where it introduces friction, and where human judgment remains irreplaceable. The goal is to create institutional memory, a playbook for human–AI co-work that can scale gradually, based on lived experience rather than theoretical models.

To sustain engagement, communication is as critical as training. Many employees see AI as an opaque force that threatens autonomy or job security. Silence from leadership reinforces that anxiety. The antidote is transparent dialogue, explaining not only what AI agents do, but why they’re being deployed, and how success will be measured. Organizations that narrate this journey openly find their teams more willing to experiment, question, and contribute to the process. The best AI transformations, in practice, are social movements inside the company: they turn skepticism into shared curiosity.

A vital but often overlooked element is redesigning roles for hybrid intelligence. For instance, a manufacturing enterprise could pilot agentic AI in quality control by pairing inspectors with autonomous diagnostic agents. These agents wouldn’t just flag anomalies; they would trace potential root causes across production lines, cross-reference historical data, and recommend corrective actions in real time. The inspectors’ task would shift from validation to supervision: reviewing the agent’s reasoning, approving interventions, and refining its decision logic through structured feedback. This approach transforms quality teams into co-architects of machine intelligence, embedding human judgment directly into the agent’s learning loop. It’s how a workforce moves from being supported by AI to actively shaping it.

Finally, companies must measure adaptability, not just output. Metrics should go beyond throughput and efficiency to capture organizational responsiveness: the time it takes to detect a deviation, recalibrate an agent, and translate that learning across teams. In practice, this means designing for visibility: every agent interaction, human intervention, and outcome forms part of a shared feedback network. The real measure of adaptability isn’t how many people can use the system, but how quickly the system, humans included, improves because of them.
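As a rough illustration of the responsiveness metric described above, the sketch below splits one deviation into three intervals: time to detect, time to recalibrate, and time to share the lesson across teams. The `DeviationEvent` schema, its field names, and the sample timestamps are hypothetical and chosen only for illustration; a real organization would pull these timestamps from its own monitoring and ticketing systems.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class DeviationEvent:
    """One agent deviation and the response to it (hypothetical schema)."""
    occurred_at: datetime      # when the agent's behavior first deviated
    detected_at: datetime      # when a person or monitor noticed it
    recalibrated_at: datetime  # when the agent was corrected
    shared_at: datetime        # when the lesson reached other teams


def responsiveness(event: DeviationEvent) -> dict[str, timedelta]:
    """Break the response into the three intervals named in the text."""
    return {
        "time_to_detect": event.detected_at - event.occurred_at,
        "time_to_recalibrate": event.recalibrated_at - event.detected_at,
        "time_to_share": event.shared_at - event.recalibrated_at,
    }


# Illustrative timestamps only; not drawn from any real deployment.
event = DeviationEvent(
    occurred_at=datetime(2025, 3, 3, 9, 0),
    detected_at=datetime(2025, 3, 3, 11, 30),
    recalibrated_at=datetime(2025, 3, 4, 10, 0),
    shared_at=datetime(2025, 3, 7, 10, 0),
)
metrics = responsiveness(event)
for name, interval in metrics.items():
    print(f"{name}: {interval}")
```

Tracking the three intervals separately, rather than one end-to-end number, shows whether the bottleneck lies in noticing problems, fixing them, or spreading what was learned.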

Workforce imagination is an economic differentiator. As intelligent systems absorb more procedural work, value creation will move toward how humans interpret, integrate, and evolve alongside them. Companies that invest in imaginative capability will future-proof their capacity to innovate.

III. Culture as the Collaboration Protocol

As agentic AI moves from isolated pilots to enterprise infrastructure, culture becomes the invisible operating system that determines whether it scales responsibly or fractures under pressure. Technology alone cannot sustain trust in autonomy. What holds it together is a culture that makes human-AI collaboration predictable, transparent, and explainable, not just technically, but behaviorally.

Building that culture starts with defining what shared work means in a hybrid system. Every organization deploying agentic AI should establish a simple principle: autonomy is permitted only when accountability is clear. Teams must know who supervises the agent, who validates its output, and how escalation works when something goes wrong. Formalizing these rules early – in policies, role descriptions, and performance metrics – prevents ambiguity once systems are live.
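One way to make the "autonomy only when accountability is clear" principle concrete is a simple pre-deployment check. The sketch below is a minimal illustration, not a governance framework: the `AgentAssignment` record, its three role fields, and the example agent and owner names are all assumptions introduced here to mirror the three questions in the paragraph above (who supervises, who validates, how escalation works).

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class AgentAssignment:
    """Named owners for one deployed agent (hypothetical record)."""
    agent_name: str
    supervisor: Optional[str] = None          # who supervises the agent
    validator: Optional[str] = None           # who validates its output
    escalation_contact: Optional[str] = None  # who is called when it fails


def missing_roles(assignment: AgentAssignment) -> list[str]:
    """Return the accountability roles that have no named owner."""
    roles = {
        "supervisor": assignment.supervisor,
        "validator": assignment.validator,
        "escalation_contact": assignment.escalation_contact,
    }
    return [role for role, owner in roles.items() if not owner]


def autonomy_permitted(assignment: AgentAssignment) -> bool:
    """Autonomy is allowed only when every role has an owner."""
    return not missing_roles(assignment)


# Example: the escalation path has not been defined yet.
draft = AgentAssignment("invoice-triage-agent", supervisor="A. Rivera",
                        validator="Finance QA")
print(autonomy_permitted(draft))  # False
print(missing_roles(draft))       # ['escalation_contact']
```

The check returns the specific gaps rather than a bare yes or no, so the team knows which role to assign before the agent goes live.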

The next layer is designing for traceability and psychological safety. Employees should be able to question an AI recommendation without penalty. The moment human challenge disappears, oversight does too. Organizations can build confidence by creating audit trails that show how an agent arrived at a decision, who reviewed it, and how feedback was incorporated into its logic. This transparency doesn’t slow work; it reinforces the integrity of the entire ecosystem.
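The audit trail described above can be pictured as an append-only log in which each entry records what the agent recommended, why, who reviewed it, and what feedback was given. The following is a minimal sketch under that assumption; the `AuditEntry` fields, the three reviewer actions, and the sample entries are hypothetical, and a production system would also need durable storage and access controls.

```python
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class AuditEntry:
    """One reviewed agent decision (hypothetical schema)."""
    agent: str
    recommendation: str   # what the agent proposed
    rationale: str        # how the agent arrived at it
    reviewer: str         # who reviewed the recommendation
    reviewer_action: str  # "approved", "overridden", or "escalated"
    feedback: str = ""    # what was fed back into the agent's logic


class AuditTrail:
    """Append-only list of entries; nothing is edited or deleted."""

    def __init__(self) -> None:
        self._entries: list[AuditEntry] = []

    def record(self, entry: AuditEntry) -> None:
        self._entries.append(entry)

    def challenged(self) -> list[AuditEntry]:
        """Entries where a human did not simply approve the agent."""
        return [e for e in self._entries if e.reviewer_action != "approved"]

    def to_json(self) -> str:
        return json.dumps([asdict(e) for e in self._entries], indent=2)


trail = AuditTrail()
trail.record(AuditEntry("qc-agent", "Hold batch 17", "Vibration outside tolerance",
                        reviewer="Inspector Lee", reviewer_action="approved"))
trail.record(AuditEntry("qc-agent", "Scrap batch 18", "Surface defect pattern",
                        reviewer="Inspector Lee", reviewer_action="overridden",
                        feedback="Defect was a camera artifact; rework instead"))
print(len(trail.challenged()))
```

Counting challenged entries gives one simple signal of whether people still feel free to question the agent: a trail with no overrides or escalations at all may indicate over-deference rather than a flawless system.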

A parallel priority is institutionalizing feedback as a two-way discipline. In most enterprises, data flows upward and authority flows downward; agentic systems disrupt both. To harness that disruption productively, companies should formalize continuous review loops: structured forums where cross-functional teams evaluate agent performance, surface anomalies, and agree on recalibration. This makes AI governance part of everyday management, not a quarterly compliance ritual.

Equally critical is setting behavioral norms for collaboration. When humans work with AI agents, subtle shifts in tone, trust, and dependency emerge. Some employees over-defer to machine outputs; others dismiss them. Both behaviors create risk. Leadership must define expected interaction standards: when to verify, when to rely, and when to override. Training programs can simulate these scenarios, giving teams language and confidence to engage constructively with autonomous systems.

Finally, culture must anchor in ethics as an active practice, not a principle. Ethical posture cannot live in policy documents; it must live in daily behavior. Before every major deployment, leaders should run a structured ethical review: Who benefits? Who might be excluded? How traceable is the outcome? Embedding such rituals into the rhythm of decision-making keeps human values at the center of machine-driven work.

Culture, in this context, is the precondition of successful adoption. A strong cultural protocol gives humans the confidence to work with autonomy and gives systems the boundaries to act responsibly. As agentic AI becomes a core member of the enterprise workforce, trust and traceability will be the new levers of performance in human-AI collaboration.

Conclusion

Agentic AI is revealing how organizations truly think. Once systems begin to act, not just calculate, the blind spots in human-AI collaboration and coordination surface fast: the indecision disguised as consensus, the data we collect but never use, the rules we enforce but don’t understand. The real test is not how much judgment we can automate, but whether our own judgment deserves to be automated at all. The next phase of progress will belong to organizations that rebuild their reflexes (how they learn, how they trust, and how they decide what matters) with the same precision they once reserved for process and profit.

For a deeper dive into implementation frameworks, explore our agentic AI guidebook, Agentic AI in Recruitment: Building Ethical Talent Workflows that Scale.

As AI reshapes the workforce, the advantage will belong to organizations that know how to blend human capability with intelligent systems. Partner with us to build the talent architecture your future demands.