As artificial intelligence becomes central to modern defense, the real disruption isn’t just technological—it’s human. This blog explores how defense organizations and professionals must adapt talent models, leadership mindsets, and operational structures for an AI-first era.

1. From Hiring to Foresight: Defense organizations must move beyond traditional recruiting and begin shaping hybrid, AI-integrated roles that align with evolving mission needs. It’s not about filling vacancies—it’s about forecasting capabilities before they’re needed.

2. Human-AI Collaboration is the New Doctrine: AI may drive speed and precision, but decision-making, context, and ethics remain human. Integrating AI means reengineering trust, training personnel for oversight, and embedding human judgment in the loop—across warfighting, logistics, and cyber defense.

3. Redefining the Candidate Archetype: Success in this domain demands range—technical fluency, mission literacy, ethical clarity, and adaptability. Candidates must prepare for roles that don’t yet exist and build cross-domain insight that mirrors how AI systems function across sea, air, land, and cyberspace.

Introduction

AI is changing more than defense strategy. It is changing how wars are fought and who fights them.

From drones to decision-support systems, artificial intelligence is reshaping military operations. But the deeper disruption is human: defense roles, hierarchies, and talent models are being rewritten in real time.

AI readiness now hinges on more than firepower. It demands people who can build models, question data, and make ethical calls in chaotic environments. The global AI race is colliding with the demands of national security, intensifying competition for defense talent, and few institutions are moving fast enough.

This piece explores a core question: how must AI in defense systems, and the defense talent pipelines behind them, adapt to an era defined by machine intelligence and human judgment? The question is urgent because the stakes are immediate.

AI and the Transformation of Defense: A Global Boom on the Horizon?

Artificial intelligence is moving from a side experiment in defense to its backbone. From battlefield tech to back-office operations, AI in defense is reshaping not just what militaries deploy, but how they work.

Conversations on platforms like LinkedIn and X make one trend clear: algorithmic decision-making, intelligent automation, and data-driven command systems are moving fast from fringe to foundational. The future of defense work is being rewritten in real time.

According to TimesTech (April 2025), the AI defense market is set to surpass $178 billion by 2034, growing at an annual rate of over 30%. This growth is structural, not incidental: AI is redefining how missions are planned, resources allocated, and future forces trained. Nations are racing to embed AI across every domain: land, air, sea, cyber, and space. With it comes soaring demand for hybrid roles and for human-AI integration, such as human-machine teaming architectures.

North America is leading this shift and is projected to reach $78 billion, bolstered by a strong defense innovation ecosystem and NATO collaboration. Within the U.S., the Department of Defense’s AI investment has more than doubled, rising from $874 million in FY2022 to $1.8 billion in FY2025 (Frost & Sullivan, May 2025). This surge is fueling new capabilities in simulation, threat detection, and cognitive warfare: not just multiplying force, but defining it.
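The "more than doubled" claim can be sanity-checked with a few lines of arithmetic. The sketch below uses only the two Frost & Sullivan endpoints quoted above and assumes three annual compounding steps between FY2022 and FY2025; the function names are illustrative and do not come from any cited source.

```python
# Figures quoted in the text (Frost & Sullivan, May 2025), in millions of USD.
FY2022_SPEND = 874.0
FY2025_SPEND = 1_800.0
YEARS = 2025 - 2022  # assumption: three annual compounding steps


def growth_multiple(start: float, end: float) -> float:
    """How many times larger the end value is than the start value."""
    return end / start


def implied_annual_growth(start: float, end: float, years: int) -> float:
    """Compound annual growth rate (CAGR) implied by two endpoints."""
    return (end / start) ** (1.0 / years) - 1.0


multiple = growth_multiple(FY2022_SPEND, FY2025_SPEND)
cagr = implied_annual_growth(FY2022_SPEND, FY2025_SPEND, YEARS)
print(f"Growth multiple: {multiple:.2f}x")
print(f"Implied annual growth: {cagr:.1%}")
```

The ratio comes out a little above 2.06x, which supports "more than doubled," and the implied compound growth is roughly 27% a year. That is an average across the period; actual year-to-year budgets may have moved unevenly.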

Meanwhile, Asia-Pacific is the fastest-growing region, driven by major investments from China, India, Japan, and South Korea. Europe, led by the UK, France, and Germany, is advancing ethical AI and interoperability frameworks. And while LAMEA (Latin America, Middle East, and Africa) nations, notably in the Middle East, Brazil, and South Africa, have smaller AI bases, their adoption is accelerating to meet regional security challenges.

Strategic rivalries are turning AI into a new arena of advantage. From logistics and reconnaissance to cyber resilience and decision speed, AI in defense is no longer a luxury but a necessity.

Major defense firms are already operationalizing this shift:

- BAE Systems deploys AI in autonomous vehicles and drones.

- Palantir powers U.S. Army ops with battlefield data fusion.

- Lockheed Martin’s AI Factory embeds machine learning into drones and missile defense.

- Raytheon, Thales, and IBM are advancing AI in surveillance, logistics, and secure data platforms. (Source: 15 Examples of AI in the Military and Defence Sector Industry in London, UK, Europe, and the USA (2024-2025) – IoT Magazine)

At the same time, a new generation of AI-native startups is accelerating innovation. U.S.-based Anduril builds autonomous drones and battlefield platforms. EdgeRunner AI is pioneering air-gapped generative systems for satellite defense. DEFCON AI supports logistics simulations, while EnCharge AI, backed by DARPA, focuses on energy-efficient processors. In Europe, Helsing leads with software-defined combat systems and AI-driven strike drones. (Source: 5 Startups Developing AI for Defense Application – Mobility Engineering Technology)

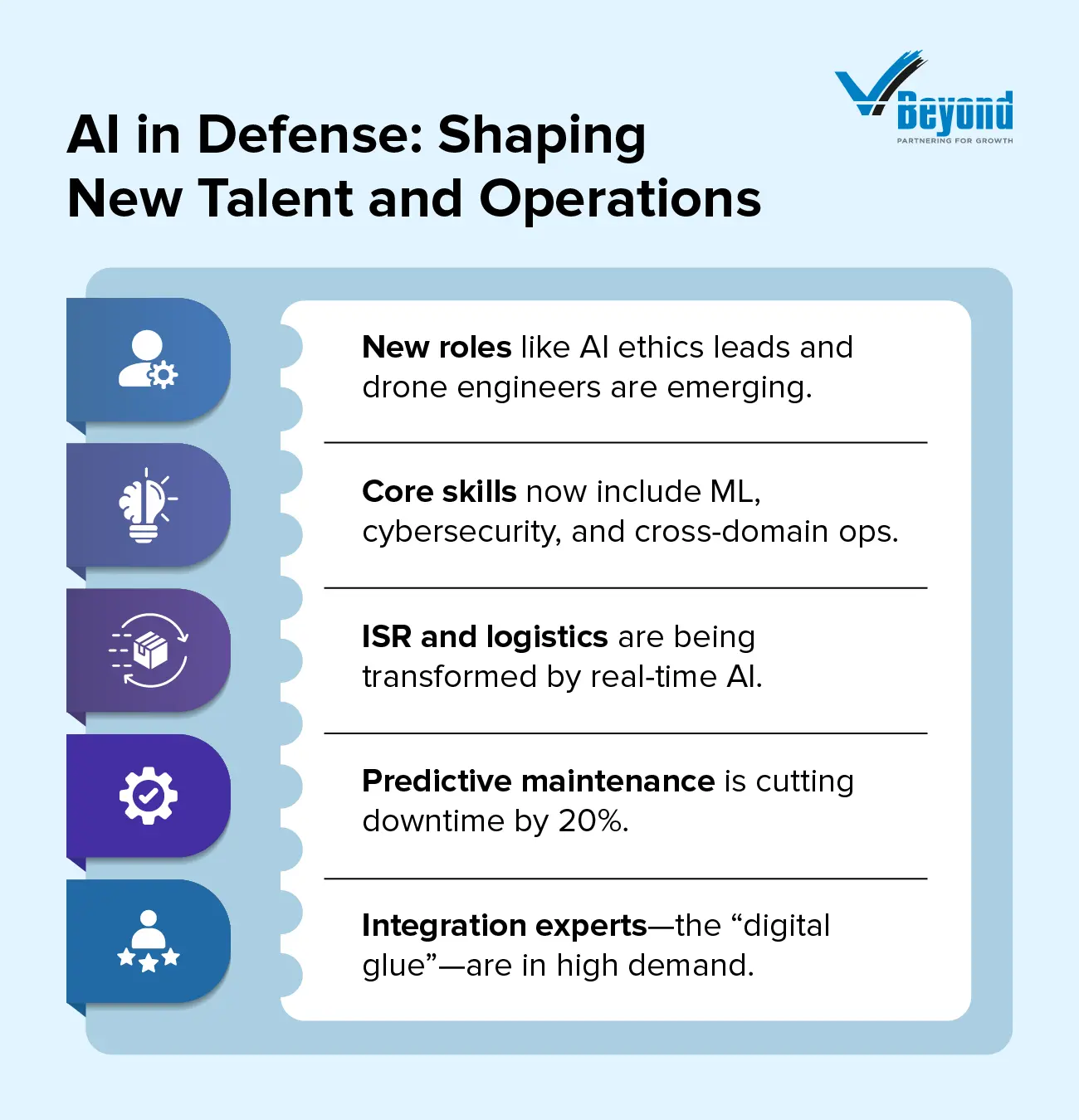

This wave of defense innovation is triggering a parallel shift in workforce demands. Technical roles like machine learning engineers, cybersecurity-AI specialists, and AI ethics leads are growing rapidly. These aren’t plug-and-play jobs; they require deep proficiency, fluency with complex data environments, and the ethical judgment to operate in high-risk scenarios.

Even the military is reorganizing. The Department of Defense’s Task Force Lima and the U.S. Army’s creation of MOS 49B, a formal AI-focused military occupation, signal a broader institutional shift toward embedding AI at the unit level. For private firms, this previews fast-approaching competitive pressure, one that demands upskilling defense teams for greater mission fluency in AI-integrated operations.

Industry-wide discussions reveal a sharp AI-driven shift in defense recruiting. Conversations on social and professional platforms indicate that job postings have surged 70% since 2023, with growing demand for AI engineers, data scientists, and AI ethics leads in autonomous systems and threat detection. New roles such as AI model auditors point to rising compliance needs. Recruiters are leaning on platforms like Eightfold AI to cut sourcing time for critical skills by 30%. With U.S. pipelines under pressure, firms are hiring internationally and partnering with UK and Australian programs as well as bootcamps. Upskilling defense teams is just as urgent: DoD mandates aim for 50% AI literacy by 2026, with training in TensorFlow and Zero Trust gaining priority. To compete, companies are raising pay above tech norms and expediting security clearances, which have become a chokepoint now that 80% of roles require them.

Like any transition, this one is not seamless. AI systems struggle with transparency and with integration into aging infrastructure. Ethical questions loom large, especially in autonomous targeting. And the talent pipeline remains narrow, competing head-to-head with Big Tech.

Defense is in the midst of a structural realignment, perhaps inching toward a boom. AI is acting as a catalyst for rethinking capability, leadership, and readiness. For defense organizations, the challenge is deploying AI where it counts; for candidates, it’s becoming the defense talent that makes that possible.

Applications of AI in Defense: Driving Workforce Transformation, Operations, and Organizational Readiness

As artificial intelligence (AI) transforms military operations, it is also reshaping the expertise needed to design, deploy, and govern these systems. AI’s expansion into predictive analytics, autonomous platforms, cyber defense, and predictive maintenance is fueling demand for technical roles that fuse data fluency with mission literacy.

According to PwC’s 2025 report The Global AI Race and Defense’s New Frontier, AI has become a core engine of strategic and operational decision-making. This shift is giving rise to new professional archetypes:

- Predictive Analytics Specialists, who convert complex data into foresight for mission planning.

- Autonomous Systems Engineers, who design and maintain AI-enabled vehicles and drones for reconnaissance, logistics, and tactical support.

- AI Ethics and Compliance Managers, who set governance protocols to ensure systems remain accountable and operationally safe.

Supporting these roles are essential skill sets:

- Machine Learning Model Development for building adaptive algorithms that enhance real-time awareness and responsiveness.

- Cybersecurity AI Integration to defend networks against evolving digital threats.

- Multi-Domain Systems Expertise to synchronize AI across land, air, space, and cyber—especially where legacy systems still dominate.

Conversations across industry forums and platforms echo this shift. In July 2025, for instance, X users highlighted a surge in demand for machine learning experts building algorithms for autonomous targeting and navigation. Data scientists are increasingly central to ISR (intelligence, surveillance, and reconnaissance) operations, transforming raw inputs into field-ready intelligence. Predictive maintenance roles are also rising, with AI-driven equipment monitoring reducing downtime by 20% in critical systems. One recurring theme is the value of professionals with cross-domain integration skills, often called “digital glue,” who align AI tools with traditional platforms like radar systems and communications networks.

Together, these roles and skill sets signal a fundamental pivot: the defense ecosystem now depends less on what systems can do, and more on who can govern, adapt, and integrate them. From a workforce perspective, this amounts to nothing less than a redefinition of military talent for an AI-first operational future.

Human-AI Integration in Defense Operations

Human-AI integration now defines the texture of defense. Across domains, AI is accelerating decisions and enabling autonomy, but its real power lies in what it doesn’t replace: human intuition. The emerging model is one of collaborative intelligence, with machines operating at scale and speed while humans anchor context, strategy, and moral judgment.

As the PwC 2025 report underscores, AI can surface patterns buried in vast volumes of battlefield data, but only humans can determine what matters and what action it warrants. Defense professionals’ own conversations mirror this rhythm: commanders consult AI-generated simulations, but decisions are ultimately shaped by human teams. The consensus is firm. AI may inform, but it cannot decide. Not alone.

This dynamic is already visible across key mission domains. In predictive decision-making, AI models forecast enemy movement in real time, while human leaders interpret those predictions in light of political, environmental, and mission-specific complexities. In collaborative autonomy, robotic vehicles and drone swarms execute tactical objectives, but rely on human oversight to intervene when algorithms hit uncertainty. In logistics, AI helps prioritize supplies and personnel amid shifting battlefield conditions, but operational judgment remains a human responsibility. Training simulations, too, are increasingly AI-generated—immersive, adaptive, and realistic—but human input ensures they reflect not just technical accuracy, but ethical and contextual relevance. Even in predictive maintenance, where AI anticipates equipment failure through sensor data, human validation is essential to avoid false positives that could delay or derail missions.
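The predictive-maintenance pattern described above (AI flags, humans validate) can be sketched as a simple review gate. This is a minimal illustration under stated assumptions, not a fielded design: the anomaly score is a plain z-score against a baseline window, and the thresholds (`review_at`, `priority_at`), status labels, and the `resolve` step are all hypothetical. The property it demonstrates is that the model only proposes; any flagged alert needs a human decision before maintenance is scheduled or the alert is dismissed.

```python
from dataclasses import dataclass
from statistics import mean, stdev


@dataclass
class Alert:
    asset_id: str
    score: float
    status: str  # "auto_clear", "needs_review", or "priority_review"


def anomaly_score(history: list[float], latest: float) -> float:
    """Absolute z-score of the latest reading against a baseline window.

    Assumes `history` holds at least two readings (stdev needs two).
    """
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return 0.0 if latest == mu else float("inf")
    return abs(latest - mu) / sigma


def triage(asset_id: str, history: list[float], latest: float,
           review_at: float = 2.0, priority_at: float = 4.0) -> Alert:
    """Route a reading: low scores clear, anything else goes to a human."""
    score = anomaly_score(history, latest)
    if score < review_at:
        status = "auto_clear"
    elif score < priority_at:
        status = "needs_review"
    else:
        status = "priority_review"
    return Alert(asset_id, score, status)


def resolve(alert: Alert, technician_confirms: bool) -> str:
    """The final action is a human call; the model only proposes."""
    if alert.status == "auto_clear":
        return "no_action"
    return "schedule_maintenance" if technician_confirms else "log_false_positive"


# Hypothetical temperature readings (degrees C) for one asset.
baseline = [70.0, 71.0, 69.5, 70.5, 70.0]
alert = triage("generator-07", baseline, latest=75.0)
print(alert.status, round(alert.score, 1))
print(resolve(alert, technician_confirms=False))
```

A real system would use a trained model rather than a z-score, but the design choice carries over: the threshold decides who looks at an alert, not what happens to the equipment. A technician rejecting a high-scoring alert is exactly the false-positive check the text calls for.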

But integration also brings new burdens. AI systems, especially those deployed in autonomous targeting, introduce complex questions around accountability and ethics. As PwC cautions, humans must remain the ethical gatekeepers: responsible for interrogating outputs, detecting bias, and ensuring compliance with humanitarian and operational norms. Trending industry conversations, too, warn against treating AI as a plug-and-play tool; instead, they call for broader ethical literacy within the force, especially as AI grows more opaque and embedded in decision loops.

And while the vision of synergy is compelling, execution remains uneven. Many personnel still lack the training to interpret or challenge AI outputs. On X, defense voices call for scenario-based learning that builds not just technical skill, but operational instinct—knowing when to trust AI, and when to override it. Technical fragility is another weak link: AI can falter in unfamiliar environments, misclassify threats, or make inferences from incomplete or misleading data. Legacy infrastructure compounds the issue. Older command-and-control systems often can’t fully support AI integration, creating friction that only cross-domain experts, fluent in both legacy and modern systems, can resolve.

Human-AI integration is about reengineering trust, not surrendering control. It requires rethinking roles, retraining teams for mission fluency, and embedding ethical AI oversight at every layer of deployment. Machines may accelerate the mission, but people still carry its weight.

Rethinking Talent Architecture: Beyond Traditional Recruiting for the AI-Driven Defense Era

As AI in defense rewrites the shape of conflict, organizations must redesign not just who they hire, but how defense talent itself is defined.

AI doesn’t follow the slow arc of procurement cycles or five-year workforce planning grids. Its pace is exponential, its edge unpredictable. Even the most resilient institutions are now being tested not on their strength, but on their strategic adaptability. What follows is not a set of instructions; defense leaders hardly need those. These cues are meant not to prescribe but to frame foresight: to shift talent thinking from reactive hiring to proactive architecture, and from filling roles to shaping capabilities before the mission calls for them:

1. Create Mission-Linked AI Hybrid Roles

Instead of simply hiring more AI engineers, defense organizations can create hybrid roles that combine mission fluency with advanced technical depth. Examples include:

- AI-ISR Fusion Officers: Personnel who combine intelligence, surveillance, and reconnaissance (ISR) expertise with AI model tuning and validation.

- Autonomy Field Test Leads: Tactical operators trained in testing AI-enabled unmanned systems under real-world constraints.

- Ethical Mission Validators: Professionals who not only understand bias and explainability in models, but also apply that to mission environments (e.g., targeting, surveillance, or autonomous decision-making contexts).

2. Operationalize AI-First Warfighting Functions

Recruiters could anticipate and seed emerging domains like:

- Cognitive Electronic Warfare Analysts: Experts using AI to simulate, predict, and counter adversary communications and jamming strategies.

- Human-AI Teaming Designers: Specialists who architect workflows between human personnel and AI agents across battlefield, logistics, or intelligence functions.

- Model Security Engineers: Focused on protecting deployed AI systems from adversarial manipulation or poisoning—an increasingly real threat vector.

3. Embed Talent Within DevSecOps Teams

Move beyond static job descriptions by embedding talent into mission-aligned, cross-functional pods that include AI engineers, military strategists, field testers, and logistics planners. This shortens the feedback loop between model design and deployment, and allows recruiters to scout for dynamic profiles that don’t fit siloed categories.

4. Prototype Talent-as-Infrastructure

The most innovative defense organizations won’t treat talent strategy as a support function—they’ll build talent architectures like they build platforms. This means:

- Creating “AI war colleges” to train internal personnel on generative AI, autonomy ethics, and simulation design.

- Funding dual-track career paths that allow data scientists or engineers to rotate between lab, deployment, and policy teams.

- Building digital twin platforms not just for vehicles or systems, but for capability forecasting—to simulate future workforce needs against tech trajectories.
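Capability forecasting, at its simplest, projects demand for a role against the pipeline that supplies it. The sketch below is a deliberately tiny stand-in for the digital-twin idea above, assuming constant annual demand growth, fixed hiring and upskilling throughput, and a flat attrition rate. Every field name and number is hypothetical and chosen only for illustration; a real workforce twin would model far more.

```python
from dataclasses import dataclass


@dataclass
class RoleForecast:
    """Hypothetical inputs for one role; all rates are annual."""
    role: str
    demand: float              # positions needed today
    supply: float              # qualified people available today
    demand_growth: float       # assumed growth in demand, e.g. 0.25 = 25%
    hires_per_year: float      # external hires added each year
    upskilled_per_year: float  # internal staff retrained each year
    attrition: float           # fraction of current supply lost each year


def project_gap(f: RoleForecast, years: int) -> list[tuple[int, float]]:
    """Return (year, demand - supply) for each projected year."""
    demand, supply = f.demand, f.supply
    gaps = []
    for year in range(1, years + 1):
        demand *= 1 + f.demand_growth
        # Attrition applies to last year's supply; new people arrive on top.
        supply = supply * (1 - f.attrition) + f.hires_per_year + f.upskilled_per_year
        gaps.append((year, demand - supply))
    return gaps


# Illustrative numbers only.
model_auditors = RoleForecast(
    role="AI model auditor", demand=100, supply=60, demand_growth=0.25,
    hires_per_year=10, upskilled_per_year=15, attrition=0.10,
)
for year, gap in project_gap(model_auditors, years=3):
    print(f"Year {year}: shortfall of {gap:.1f} positions")
```

With these made-up inputs the shortfall widens every year, because demand compounds while the pipeline adds a fixed number of people. That asymmetry is the kind of signal foresight-driven talent planning is meant to surface before the mission calls for it.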

5. Recruit for Adaptability, Not Just Expertise

AI systems evolve fast—and so should the people managing them. Roles like AI System Stewards (model explainability and tuning in deployed environments) or Dynamic Mission Configurators (adapting AI-enabled platforms to new geographies or adversaries) require adaptability, learning velocity, and strategic empathy—not just code fluency.

6. Compete Globally with Strategic Incentives

To stay ahead in the AI talent race, defense organizations are offering salaries that exceed tech-sector averages by 12% and are pushing to fast-track security clearances, a critical bottleneck, with 80% of AI roles requiring them. But compensation alone isn’t enough. As global competition intensifies, particularly from non-allied states aggressively scaling their AI workforces, organizations must think beyond borders. Expanding international pipelines, crafting mission-aligned career incentives, and tapping into shared security ecosystems are becoming essential tactics when national advantage hinges on who gets to the right talent first.

What Do Trending Conversations Say?

Recruiters, technologists, and defense insiders are voicing the same urgency: AI has upended traditional hiring, and the defense sector is scrambling to keep pace. Threads highlight a surge in postings for hybrid roles, profiles that merge tactical awareness with technical fluency, as organizations seek candidates who can not only build models but also apply them responsibly in mission-critical environments. Tools like Eightfold AI are gaining traction, but even automation can’t fix the deepest constraints: clearance bottlenecks, global talent shortages, and the steep learning curve of aligning AI skills with defense culture. Upskilling is a recurring theme, with training in TensorFlow, Zero Trust, and explainability now seen as prerequisites rather than differentiators. And as companies raise salaries and accelerate pipelines to compete with Big Tech, one insight emerges: defense is not just competing for talent; it is redefining what talent means.

AI in Defense Recruiting: A Candidate’s Roadmap

If the defense sector is racing to redefine talent for AI, then candidates are stepping into hybrid roles that didn’t exist a few years ago and may not have clear templates today. AI’s expansion across autonomy, cyber, and predictive systems is rewriting what operational and mission fluency look like. For those looking to enter or pivot into this space, success will depend less on ticking boxes and more on developing range: the ability to span code and command, innovation and AI ethics, lab and field.

Below is a roadmap, not to follow blindly, but to adapt as the AI in defense domain evolves:

1. Develop Dual Fluency: Tech and Mission

Success in defense AI roles increasingly demands bilingual thinking: understanding both the technical backbone (e.g., ML pipelines, sensor fusion, simulation models) and the mission context (e.g., ISR, logistics, command-and-control).

- Build baseline fluency in AI tools like TensorFlow, PyTorch, and Hugging Face Transformers.

- Pair it with contextual awareness—via military doctrine primers, strategic studies, or wargaming simulations.

Tactic: Enroll in interdisciplinary programs or bootcamps that combine AI with defense systems, or pursue certifications through institutions like AFCEA, INSA, or NATO’s DEEP AI programs.

2. Aim for Roles That Don’t Exist Yet

Some of the most impactful AI roles in defense today didn’t exist five years ago. Candidates should prepare for emerging profiles, such as:

- Autonomous Systems Configurators

- AI-Enabled Logistics Planners

- Model Risk Analysts for Defense Scenarios

- Red Teamers for AI Safety and Adversarial Testing

Tactic: Monitor job descriptions in AI-intensive defense programs (e.g., the Chief Digital and AI Office, which absorbed the JAIC, or the Army Software Factory) to spot early signals of new role archetypes.

3. Build Cross-Domain Awareness

Defense AI roles span space, cyber, air, land, and sea. The most competitive candidates are those who can translate AI capabilities across multiple mission domains.

- Study real-world case studies—like AI in submarine navigation or predictive cyber risk modeling for satellite networks.

- Understand how AI integrates with legacy platforms and secure networks.

Tactic: Follow technical briefings, defense contractor blogs, and simulation tools that visualize AI deployment across domains (e.g., DoD’s AI toolkit, Lockheed’s iLab updates).

4. Strengthen Ethical and Security Postures

Candidates must go beyond “can I build this?” to ask “should we deploy this?” Defense organizations increasingly prioritize AI explainability, robustness, and ethical deployment—and will hire for it.

- Gain familiarity with frameworks like NATO’s Principles of Responsible Use of AI in Defence, the IEEE Global Initiative on Ethics of Autonomous and Intelligent Systems, or the DoD’s AI Ethical Principles.

- Be comfortable with secure coding, model validation, and adversarial testing.

Tactic: Contribute to or audit open-source AI models in defense-aligned contexts and participate in AI security competitions like Capture-the-Flag (CTF) events focused on adversarial ML.

5. Signal Adaptability, Not Just Experience

AI in defense is evolving rapidly; employers are prioritizing learning agility over static experience. Candidates who demonstrate a growth mindset and systems thinking will stand out.

- Document experience building, fine-tuning, or evaluating AI in constrained or novel environments.

- Highlight cross-functional collaboration: e.g., working with operators, ethicists, or field testers.

Tactic: Build a portfolio that goes beyond GitHub—include mission-driven narratives of how your AI work supports tactical goals, operational resilience, or ethical decision-making.

What Do Current Signals Say?

Conversations point to a sharp rise in demand for roles anchored in AI ethics, model auditing, and cross-domain application of AI in defense. Employers are struggling with clearance delays—80% of roles require them—and seeking defense talent who can hit the ground running. Certifications like CompTIA Security+ and project portfolios built around mission-relevant challenges (e.g., predictive maintenance, autonomous systems, or cybersecurity AI for threat detection) are becoming key differentiators. What matters is how quickly you can learn, adapt, and lead with accountability, an essential marker of AI readiness.

Conclusion: Not Just Prepared, But Preparing

Defense has never been about certainty. It’s always been about readiness. And AI doesn’t change that. It just asks harder questions, faster.

What happens when the battlefield thinks back? When the system suggests before the commander speaks? When judgment must be trained, translated, and transmitted into code?

The answers won’t come from just better tools or smarter algorithms. They’ll come from how well institutions can evolve without losing their soul. And how well people can move with machines, without letting them lead.

In this new terrain, the winners won’t be the most advanced. They’ll be the most aligned.

Is Your Talent Strategy Future-Ready?

As AI reshapes the very definition of readiness, industries like defense are becoming testbeds for what’s next in hiring, roles, and workforce agility. At VBeyond Corporation, we help forward-looking organizations stay ahead—by spotting role shifts early, building adaptive pipelines, and aligning talent with tech transformations.

Let’s talk about building tomorrow’s teams, today.

Partner with us to reimagine how and who you hire.

FAQs

1. What is AI readiness, and why does it matter in defense hiring?

AI readiness refers to an organization’s capability to adopt, integrate, and scale AI technologies effectively, especially in high-stakes environments like defense. It’s not just about tools; it’s about having the right people with technical, ethical, and mission-oriented skills to operationalize AI systems.

2. What types of AI roles are in highest demand in the defense sector?

Roles in autonomous systems engineering, cybersecurity AI, AI ethics, predictive analytics, and model risk auditing are increasingly sought after. Hybrid roles like AI-ISR fusion officers and mission-integrated AI designers are also emerging.

3. How does your staffing firm address the clearance and compliance challenges in defense hiring?

We specialize in recruiting security-cleared talent and navigating the complex clearance timelines. Our pre-vetted candidate pipelines include professionals already eligible or cleared, minimizing delays in mission-critical roles.

4. Can you support workforce transformation and upskilling for AI integration?

Yes—beyond staffing, we help organizations plan talent architecture, identify AI readiness gaps, and connect with training and upskilling programs aligned to defense standards (e.g., Zero Trust, TensorFlow).

5. What makes AI talent recruitment different in the defense sector?

Unlike commercial tech, defense AI roles require a mix of technical depth, mission fluency, ethical awareness, and often security clearance. We specialize in sourcing this unique blend of skills across global markets.

6. How do you stay ahead in the global AI talent race for defense?

We track global hiring signals, emerging role archetypes, and clearance availability in real time. Our sourcing tools and partnerships allow us to build adaptive, future-proof pipelines in a rapidly evolving AI in defense landscape.